# pix2pixHD

### [[Project]](https://tcwang0509.github.io/pix2pixHD/) [[Youtube]](https://youtu.be/3AIpPlzM_qs) [[Paper]](https://arxiv.org/pdf/1711.11585.pdf)

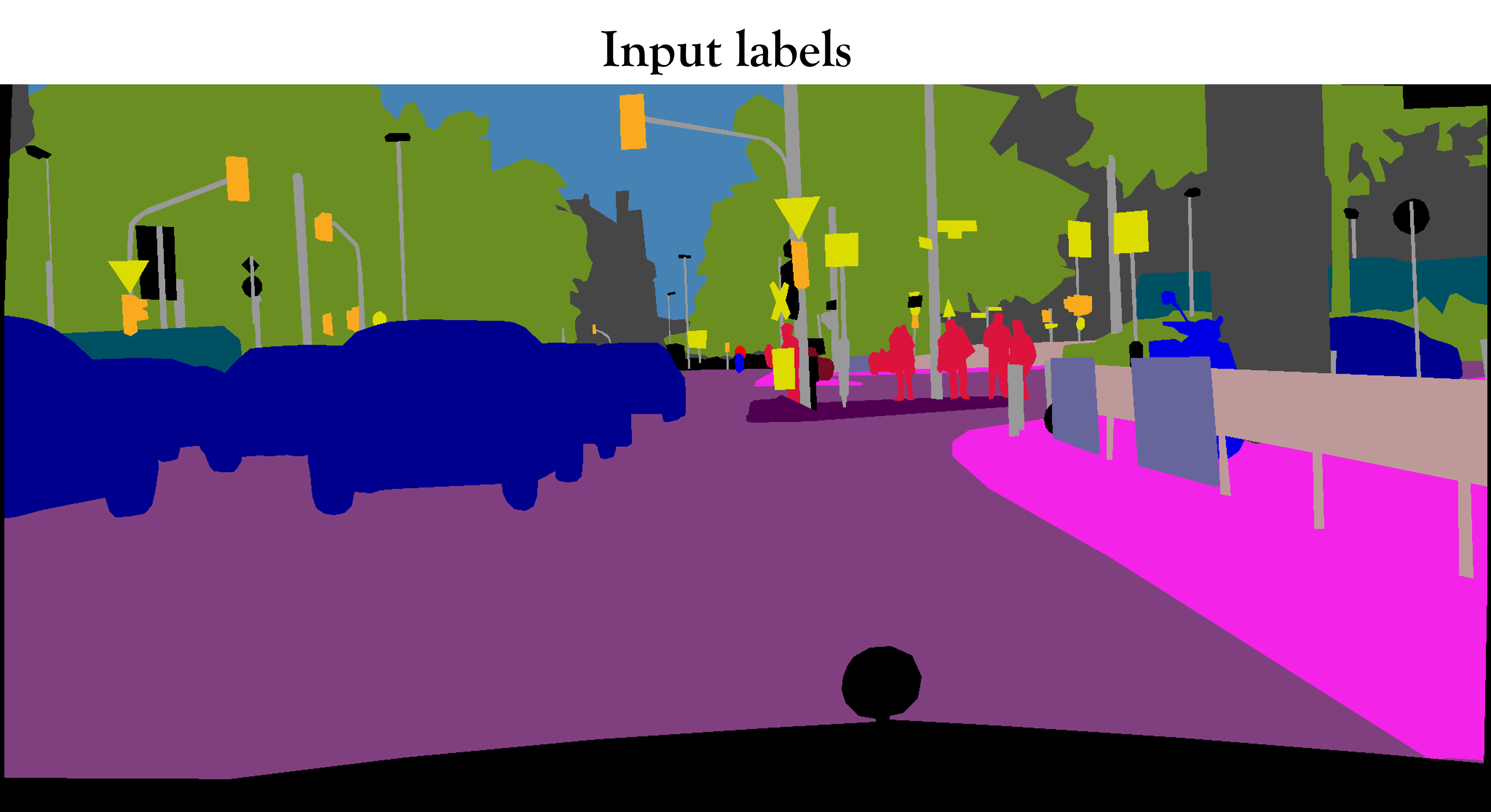

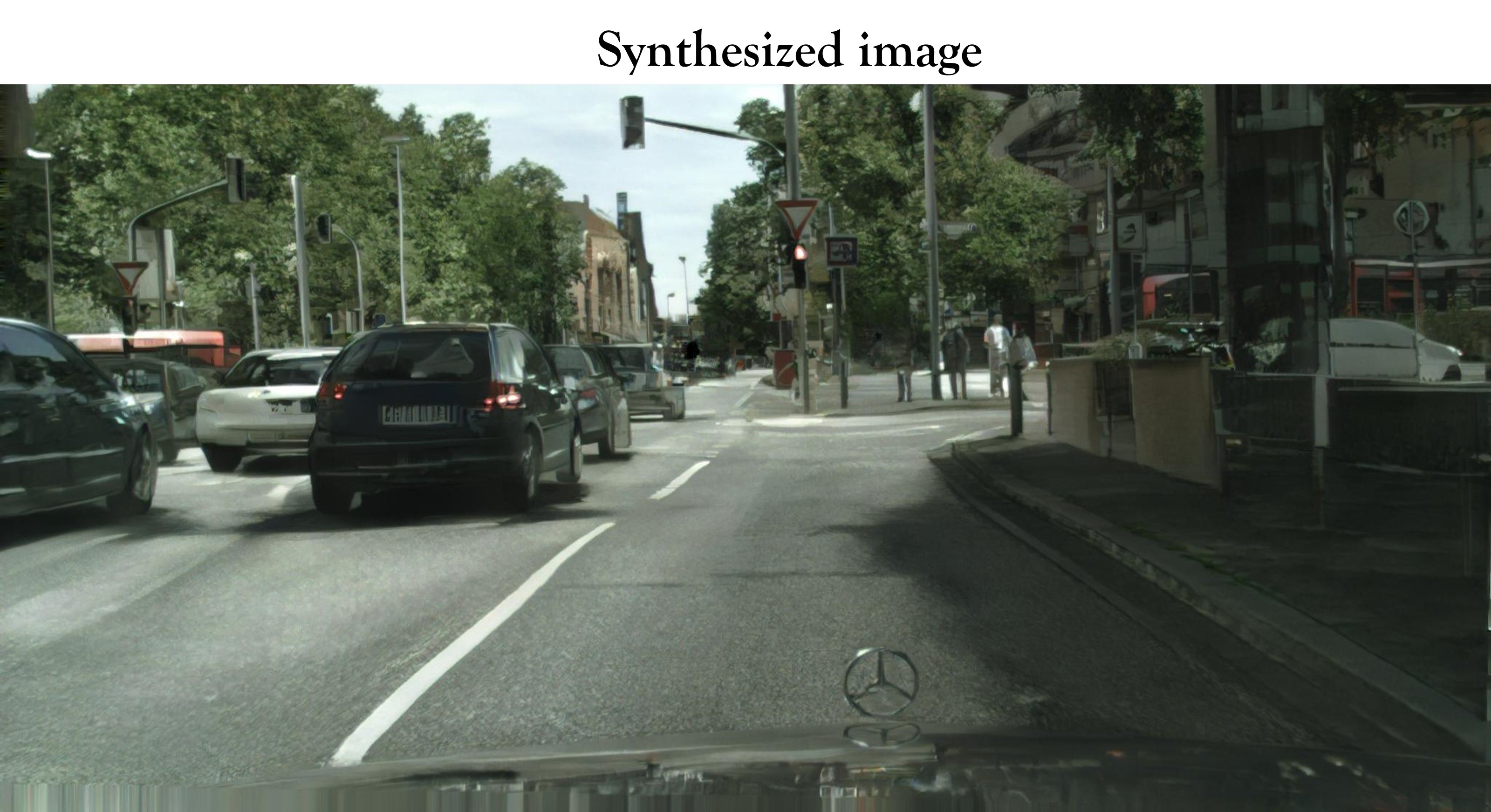

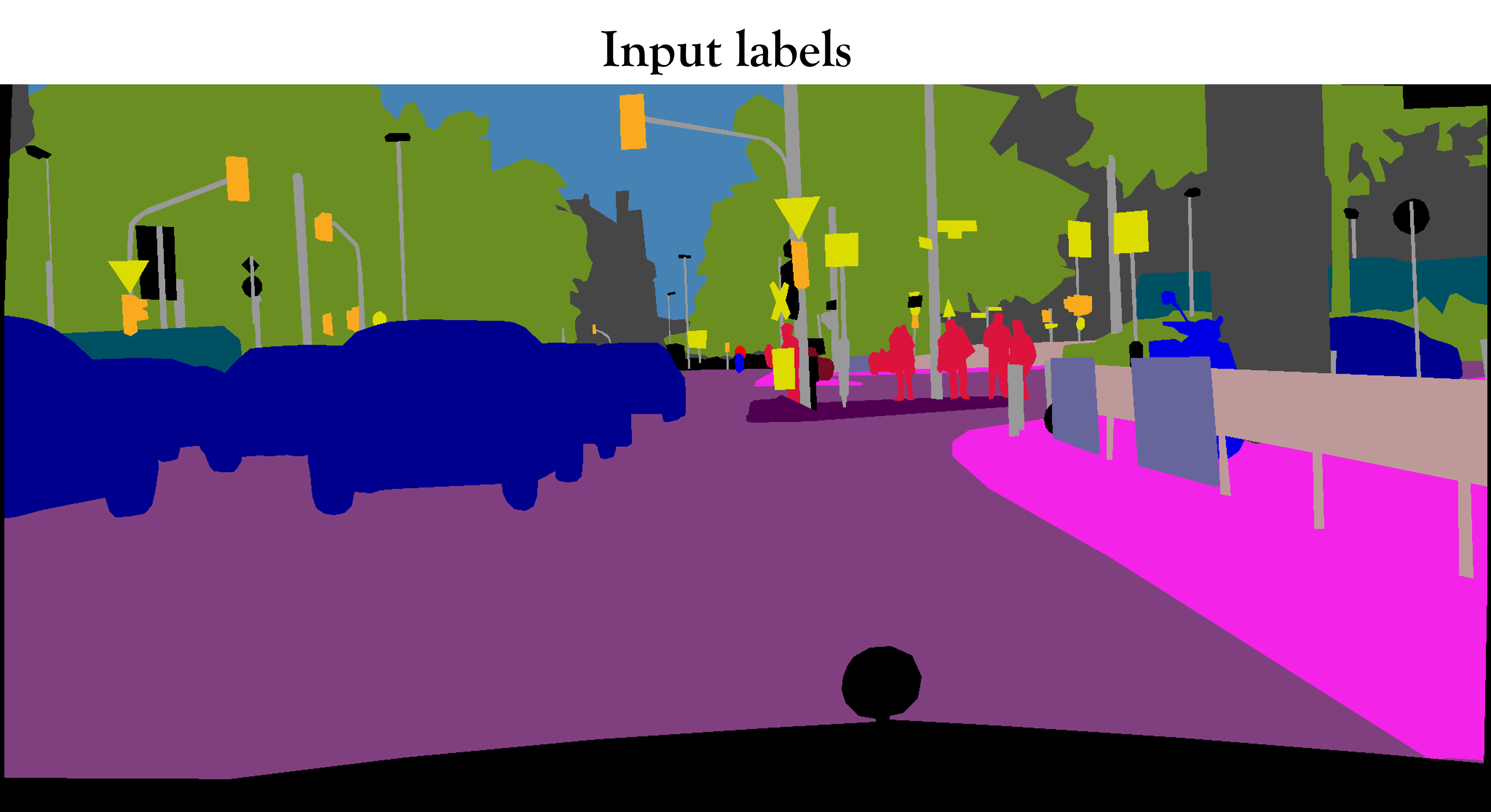

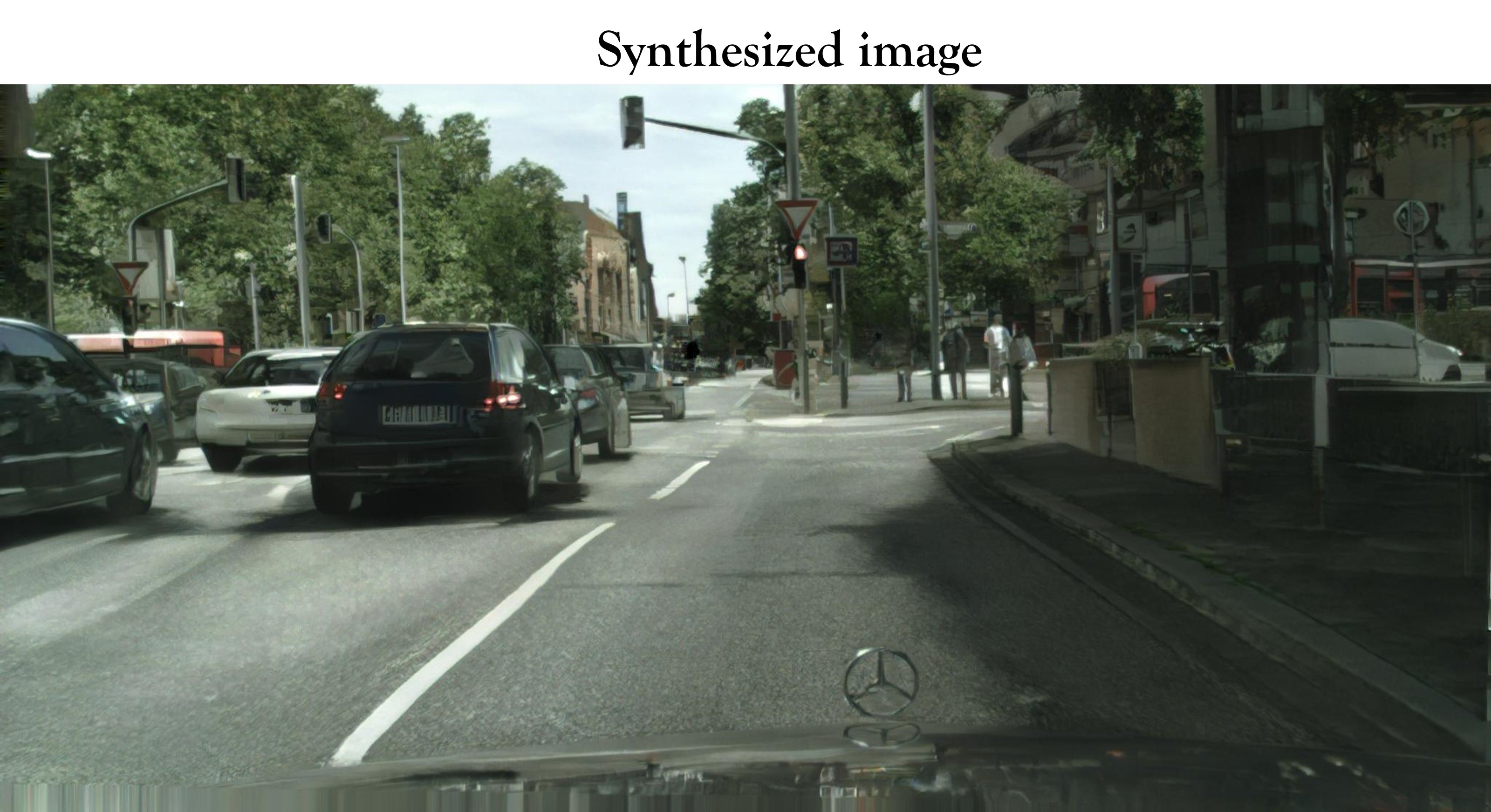

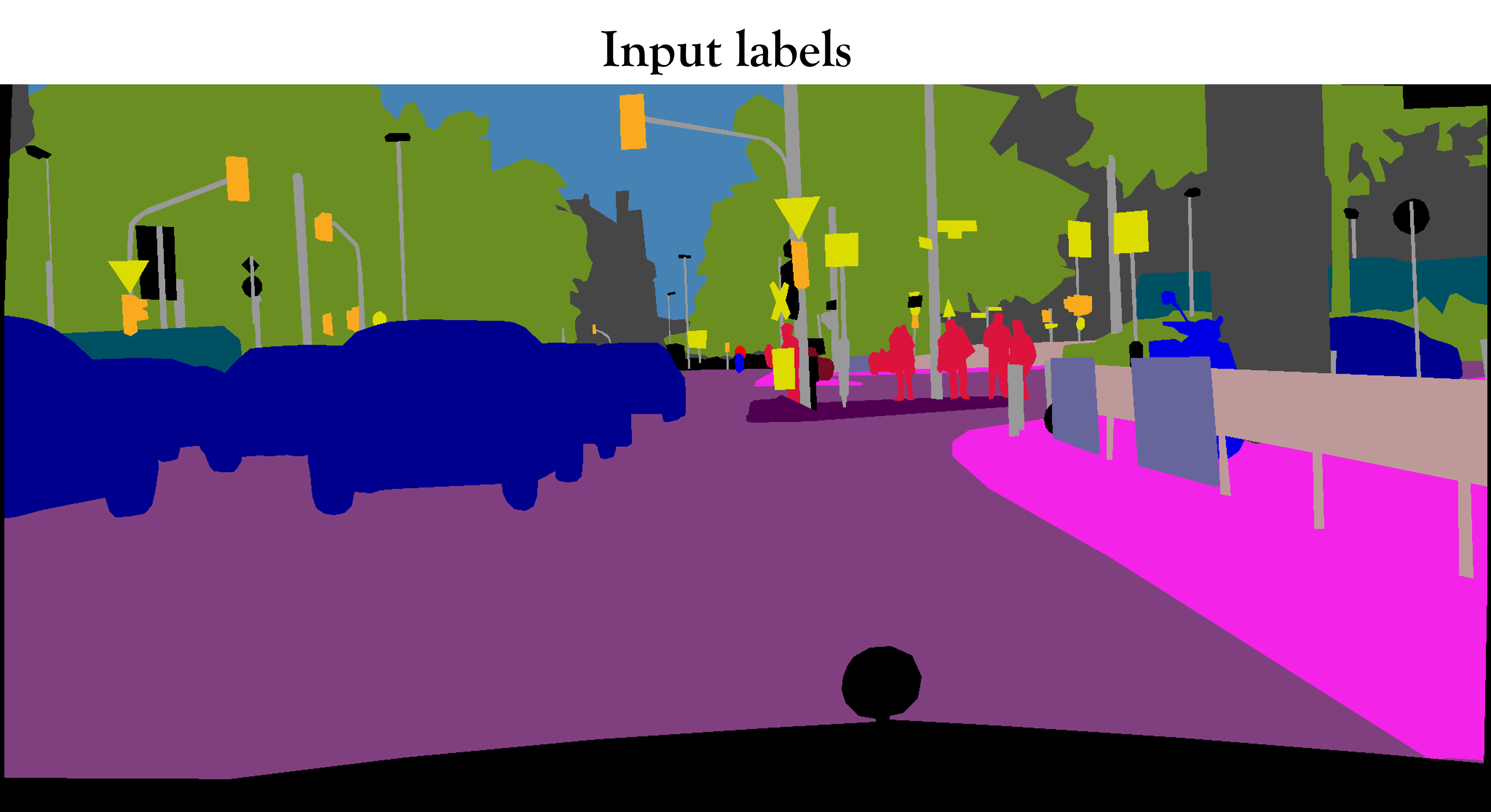

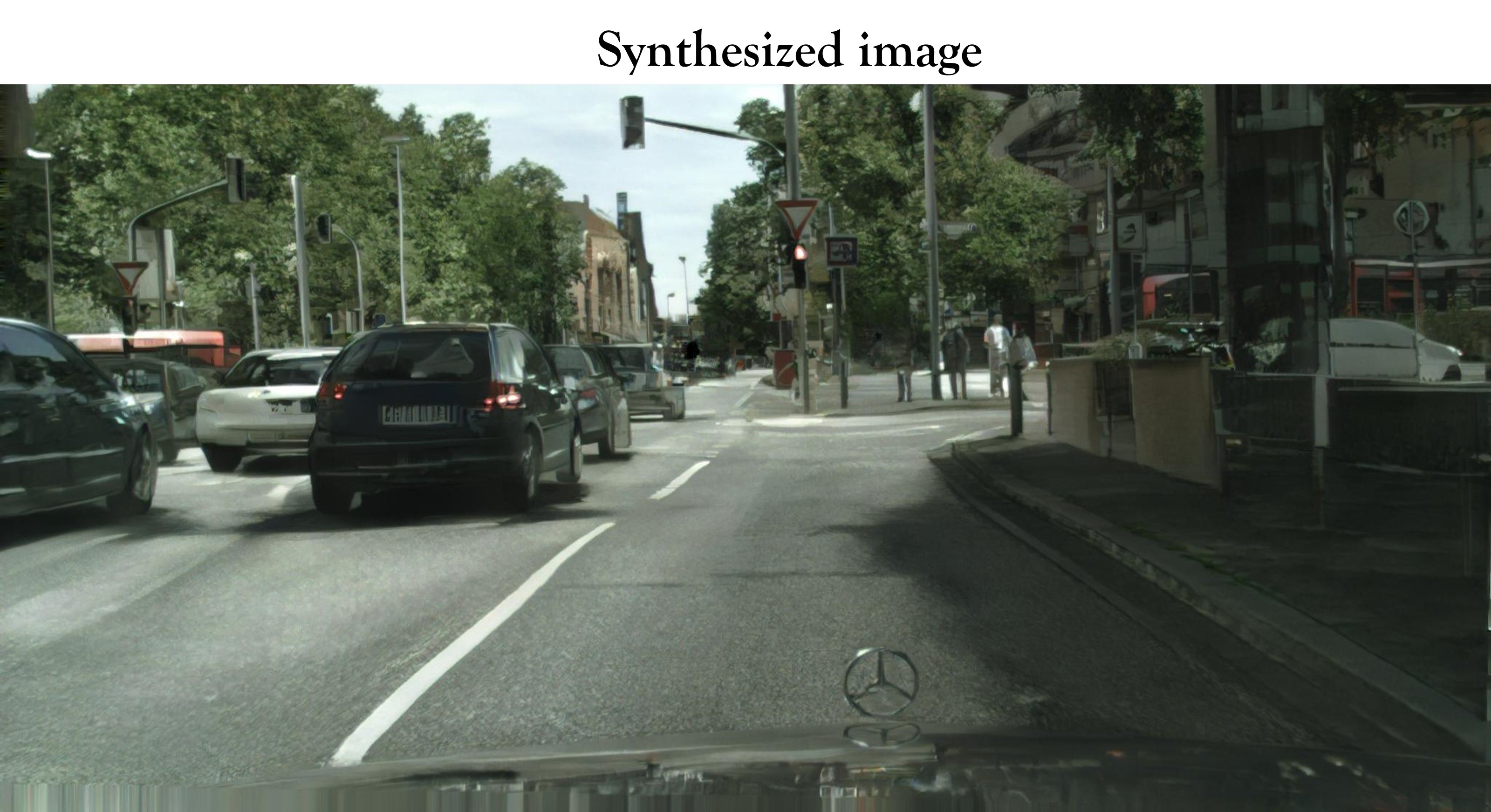

PyTorch implementation of our method for high-resolution (e.g., 2048x1024) photorealistic image-to-image translation. It can be used to turn semantic label maps into photorealistic images or to synthesize portraits from face label maps.

[High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs](https://tcwang0509.github.io/pix2pixHD/)

[Ting-Chun Wang](https://tcwang0509.github.io/)<sup>1</sup>, [Ming-Yu Liu](http://mingyuliu.net/)<sup>1</sup>, [Jun-Yan Zhu](http://people.eecs.berkeley.edu/~junyanz/)<sup>2</sup>, Andrew Tao<sup>1</sup>, [Jan Kautz](http://jankautz.com/)<sup>1</sup>, [Bryan Catanzaro](http://catanzaro.name/)<sup>1</sup>

<sup>1</sup>NVIDIA Corporation, <sup>2</sup>UC Berkeley

In arXiv, 2017.

## Image-to-image translation at 2k/1k resolution

- Our label-to-streetview results

- Interactive editing results

- Additional streetview results

- Label-to-face and interactive editing results

- Our editing interface

## Prerequisites

- Linux or macOS

- Python 2 or 3

- NVIDIA GPU (12G or 24G of memory) + CUDA and cuDNN

## Getting Started

### Installation

- Install PyTorch and dependencies from http://pytorch.org

- Install the Python library [dominate](https://github.com/Knio/dominate):

```bash
pip install dominate
```

- Clone this repo:

```bash
git clone https://github.com/NVIDIA/pix2pixHD
cd pix2pixHD
```

### Testing

- A few example Cityscapes test images are included in the `datasets` folder.

- Please download the pre-trained Cityscapes model from [here](https://drive.google.com/file/d/1h9SykUnuZul7J3Nbms2QGH1wa85nbN2-/view?usp=sharing) (google drive link), and put it under `./checkpoints/label2city_1024p/`

- Test the model (`bash ./scripts/test_1024p.sh`):

```bash
#!./scripts/test_1024p.sh
python test.py --name label2city_1024p --netG local --ngf 32 --resize_or_crop none
```

The test results will be saved to an HTML file: `./results/label2city_1024p/test_latest/index.html`.

More example scripts can be found in the `scripts` directory.
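The checkpoint-placement step from the list above can be scripted as follows. This is a minimal sketch: `install_checkpoint` is a hypothetical helper (not part of the repo), and it assumes the Google Drive download is a single file that only needs to be moved into place. If the download turns out to be an archive, extract it first and pass the extracted file instead.

```bash
# Hypothetical helper (not part of pix2pixHD): move a downloaded checkpoint
# file into the folder that `--name label2city_1024p` reads from.
install_checkpoint() {
  local src="$1"                                        # downloaded file
  local dest_dir="${2:-./checkpoints/label2city_1024p}"  # target folder
  if [ ! -f "$src" ]; then
    printf 'checkpoint file not found: %s\n' "$src" >&2
    return 1
  fi
  mkdir -p "$dest_dir"   # create the checkpoint folder if it is missing
  mv "$src" "$dest_dir/" # keep the original file name
}

# Usage (the file name is a placeholder for whatever the download is called):
#   install_checkpoint ~/Downloads/<downloaded-file>
```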

### Dataset

- We use the Cityscapes dataset. To train a model on the full dataset, please download it from the [official website](https://www.cityscapes-dataset.com/) (registration required).

After downloading, please put it under the `datasets` folder in the same way the example images are provided.
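As a sketch of that layout, the helper below creates empty training folders next to the examples. The folder names (`train_label`, `train_inst`, `train_img` under `./datasets/cityscapes`) are an assumption based on the loader pairing `<phase>_label`, `<phase>_inst` and `<phase>_img` folders; compare them with the example folders in `datasets` before copying data in. `make_cityscapes_dirs` is a hypothetical name.

```bash
# Hypothetical helper: create the folder layout assumed for Cityscapes data.
# Assumed convention: <root>/<phase>_label, <phase>_inst and <phase>_img.
make_cityscapes_dirs() {
  local root="${1:-./datasets/cityscapes}"
  local phase="${2:-train}"
  local kind
  for kind in label inst img; do
    mkdir -p "$root/${phase}_${kind}"
  done
}

# Afterwards, copy the Cityscapes label maps, instance maps and photos
# into train_label/, train_inst/ and train_img/ respectively.
```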

### Training

- Train a model at 1024 x 512 resolution (`bash ./scripts/train_512p.sh`):

```bash
#!./scripts/train_512p.sh
python train.py --name label2city_512p
```

- To view training results, please check out the intermediate results in `./checkpoints/label2city_512p/web/index.html`.

If you have TensorFlow installed, you can view TensorBoard logs in `./checkpoints/label2city_512p/logs` by adding `--tf_log` to the training scripts.
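One way to add `--tf_log` only when TensorFlow is actually available is sketched below. `tf_log_flag` and the `PYTHON` override are hypothetical; the check simply tries `import tensorflow` with the interpreter you train with. The `tensorboard --logdir` line assumes the `tensorboard` command that ships with TensorFlow is on your `PATH`.

```bash
# Hypothetical helper: print --tf_log only when TensorFlow can be imported,
# so the same training command works with or without TensorFlow installed.
tf_log_flag() {
  local py="${PYTHON:-python}"   # interpreter used to run train.py
  if "$py" -c "import tensorflow" >/dev/null 2>&1; then
    printf '%s\n' "--tf_log"
  fi
}

# Usage (unquoted on purpose so an empty result adds no argument):
#   python train.py --name label2city_512p $(tf_log_flag)
#   tensorboard --logdir ./checkpoints/label2city_512p/logs
```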

### Multi-GPU training

- Train a model using multiple GPUs (`bash ./scripts/train_512p_multigpu.sh`):

```bash
#!./scripts/train_512p_multigpu.sh
python train.py --name label2city_512p --batchSize 8 --gpu_ids 0,1,2,3,4,5,6,7
```

Note: this is untested; we trained our model on a single GPU only. Please use it at your own discretion.
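The script above pairs eight GPUs with `--batchSize 8`, i.e. one image per GPU. A minimal sketch for building the same two flags for a different GPU count follows; it assumes the one-image-per-GPU ratio should be kept, which the README does not state explicitly. `multigpu_flags` is a hypothetical helper, and `nvidia-smi -L` prints one line per visible GPU.

```bash
# Hypothetical helper: print "--batchSize N --gpu_ids 0,...,N-1" for N GPUs,
# keeping one image per GPU as in train_512p_multigpu.sh.
multigpu_flags() {
  local n="$1"
  if ! [ "$n" -ge 1 ] 2>/dev/null; then
    printf 'need a positive GPU count, got: %s\n' "$n" >&2
    return 1
  fi
  local ids
  ids=$(seq 0 $((n - 1)) | paste -sd, -)   # comma-joined 0..n-1
  printf '%s\n' "--batchSize $n --gpu_ids $ids"
}

# Usage, counting GPUs with nvidia-smi:
#   python train.py --name label2city_512p $(multigpu_flags "$(nvidia-smi -L | wc -l)")
```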

### Training at full resolution

- Training at full resolution (2048 x 1024) requires a GPU with 24G of memory (`bash ./scripts/train_1024p_24G.sh`).

If only GPUs with 12G memory are available, please use the 12G script (`bash ./scripts/train_1024p_12G.sh`), which will crop the images during training. Performance is not guaranteed using this script.
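Choosing between the two scripts can be automated from the reported GPU memory, as sketched below. `pick_1024p_script` is hypothetical, and the 23000 MiB cutoff is an illustrative assumption rather than a value from this README: reported totals can sit slightly below a card's nominal size, so the cutoff is set below 24576 MiB. `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits` reports the total per GPU in MiB.

```bash
# Hypothetical helper: pick the full-resolution training script from the
# total memory (in MiB) of the GPU that will be used.
# Assumption: the 23000 MiB cutoff is illustrative, not from the README.
pick_1024p_script() {
  local mem_mib="$1"
  if ! [ "$mem_mib" -ge 0 ] 2>/dev/null; then
    printf 'not a memory size in MiB: %s\n' "$mem_mib" >&2
    return 1
  fi
  if [ "$mem_mib" -ge 23000 ]; then
    printf '%s\n' "./scripts/train_1024p_24G.sh"
  else
    printf '%s\n' "./scripts/train_1024p_12G.sh"   # crops during training
  fi
}

# Usage, reading the first GPU's memory with nvidia-smi:
#   mem=$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1)
#   bash "$(pick_1024p_script "$mem")"
```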

## More Training/Test Details

- Flags: see `options/train_options.py` and `options/base_options.py` for all the training flags; see `options/test_options.py` and `options/base_options.py` for all the test flags.

- Instance map: we take in both label maps and instance maps as input. If you don't want to use instance maps, please specify the flag `--no_instance`.
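If a dataset ships without instance maps, `--no_instance` can be added automatically, as in the sketch below. It assumes instance maps live in a `<phase>_inst` folder under the data root (default `./datasets/cityscapes`); both that layout and the `instance_flag` name are assumptions for illustration.

```bash
# Hypothetical helper: print --no_instance when the dataset has no instance
# maps. Assumes instance maps live in <dataroot>/<phase>_inst.
instance_flag() {
  local dataroot="${1:-./datasets/cityscapes}"
  local phase="${2:-train}"
  if [ ! -d "$dataroot/${phase}_inst" ]; then
    printf '%s\n' "--no_instance"
  fi
}

# Usage:
#   python train.py --name label2city_512p $(instance_flag)
#   python test.py --name label2city_512p $(instance_flag ./datasets/cityscapes test)
```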

## Citation

If you find this useful for your research, please cite our paper:

```
@article{wang2017highres,
  title={High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs},
  author={Ting-Chun Wang and Ming-Yu Liu and Jun-Yan Zhu and Andrew Tao and Jan Kautz and Bryan Catanzaro},
  journal={arXiv preprint arXiv:1711.11585},
  year={2017}
}
```

## Acknowledgments

This code borrows heavily from [pytorch-CycleGAN-and-pix2pix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix).