# CycleGAN and pix2pix in PyTorch

This is our ongoing PyTorch implementation for both unpaired and paired image-to-image translation. Check out the original [CycleGAN Torch](https://github.com/junyanz/CycleGAN) and [pix2pix Torch](https://github.com/phillipi/pix2pix) if you would like to reproduce the exact same results in the paper.

The code was written by [Jun-Yan Zhu](https://github.com/junyanz) and [Taesung Park](https://github.com/taesung89).

#### CycleGAN: [[Project]](https://junyanz.github.io/CycleGAN/) [[Paper]](https://arxiv.org/pdf/1703.10593.pdf) [[Torch]](https://github.com/junyanz/CycleGAN)

#### Pix2pix: [[Project]](https://phillipi.github.io/pix2pix/) [[Paper]](https://arxiv.org/pdf/1611.07004v1.pdf) [[Torch]](https://github.com/phillipi/pix2pix)

#### Pix2pix: [[Project]](https://phillipi.github.io/pix2pix/) [[Paper]](https://arxiv.org/pdf/1611.07004v1.pdf) [[Torch]](https://github.com/phillipi/pix2pix)

#### [[EdgesCats Demo]](https://affinelayer.com/pixsrv/) [[pix2pix-tensorflow]](https://github.com/affinelayer/pix2pix-tensorflow)

Written by [Christopher Hesse](https://twitter.com/christophrhesse)

#### [[EdgesCats Demo]](https://affinelayer.com/pixsrv/) [[pix2pix-tensorflow]](https://github.com/affinelayer/pix2pix-tensorflow)

Written by [Christopher Hesse](https://twitter.com/christophrhesse)

If you use this code for your research, please cite:

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/)\*, [Taesung Park](https://taesung.me/)\*, [Phillip Isola](http://web.mit.edu/phillipi/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In arxiv, 2017. (* equal contributions)

Image-to-Image Translation Using Conditional Adversarial Networks

[Phillip Isola](http://web.mit.edu/phillipi/), [Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/), [Tinghui Zhou](https://people.eecs.berkeley.edu/~tinghuiz/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In CVPR 2017.

## Prerequisites

- Linux or OSX.

- Python 2 or Python 3.

- CPU or NVIDIA GPU + CUDA CuDNN.

## Getting Started

### Installation

- Install PyTorch and dependencies from http://pytorch.org/

- Install python libraries [dominate](https://github.com/Knio/dominate).

- Clone this repo:

```bash

git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

cd pytorch-CycleGAN-and-pix2pix

```

### CycleGAN train/test

- Download a CycleGAN dataset (e.g. maps):

```bash

bash ./datasets/download_cyclegan_dataset.sh maps

```

- Train a model:

```bash

python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

```

To view results as the model trains, check out the html file `./checkpoints/maps_cyclegan/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --phase test

```

The test results will be saved to a html file here: `./results/maps_cyclegan/latest_test/index.html`.

### pix2pix train/test

- Download a pix2pix dataset (e.g.facades):

```bash

bash ./datasets/download_pix2pix_dataset.sh facades

```

- Train a model:

```bash

python train.py --dataroot ./datasets/facades --name facades_pix2pix --gpu_ids 0 --model pix2pix --align_data --which_direction BtoA

```

To view results as the model trains, check out the html file `./checkpoints/facades_pix2pix/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --phase val --align_data --which_direction BtoA

```

The test results will be saved to a html file here: `./results/facades_pix2pix/latest_val/index.html`.

More example scripts can be found at `scripts` directory.

## Training/test Details

- See `options/train_options.py` and `options/base_options.py` for training flags; see `optoins/test_options.py` and `options/base_options.py` for test flags.

- CPU/GPU: Set `--gpu_ids -1` to use CPU mode; set `--gpu_ids 0,1,2` for multi-GPU mode.

- During training, you can visualize the result of current training. If you set `--display_id 0`, we will periodically save the training results to `[opt.checkpoints_dir]/[opt.name]/web/`. If you set `--display_id` > 0, the results will be shown on a local graphics web server launched by [szym/display: a lightweight display server for Torch](https://github.com/szym/display). To do this, you should have Torch, Python 3, and the display package installed. You need to invoke `th -ldisplay.start 8000 0.0.0.0` to start the server.

### CycleGAN Datasets

Download the CycleGAN datasets using the following script:

```bash

bash ./datasets/download_cyclegan_dataset.sh dataset_name

```

- `facades`: 400 images from the [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps.

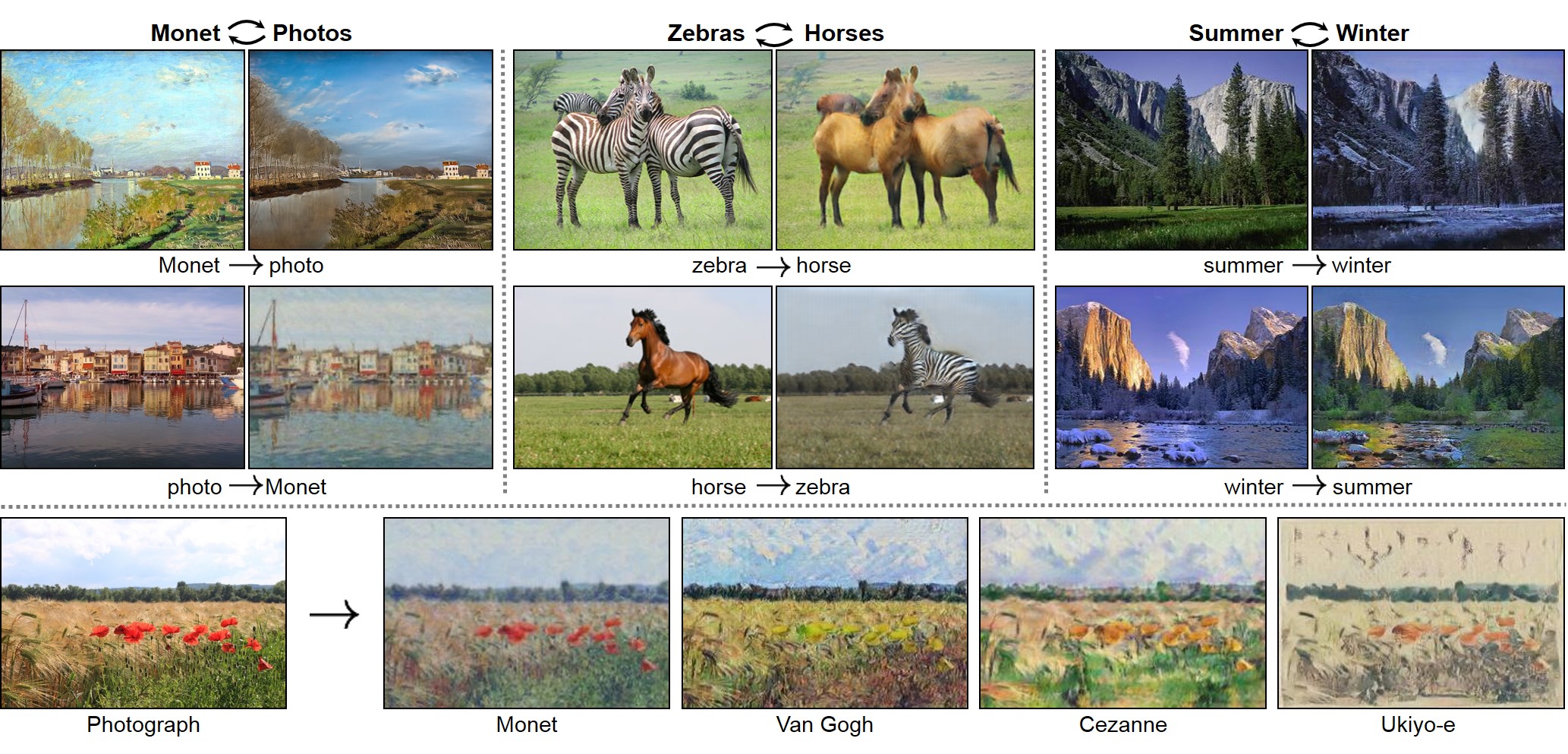

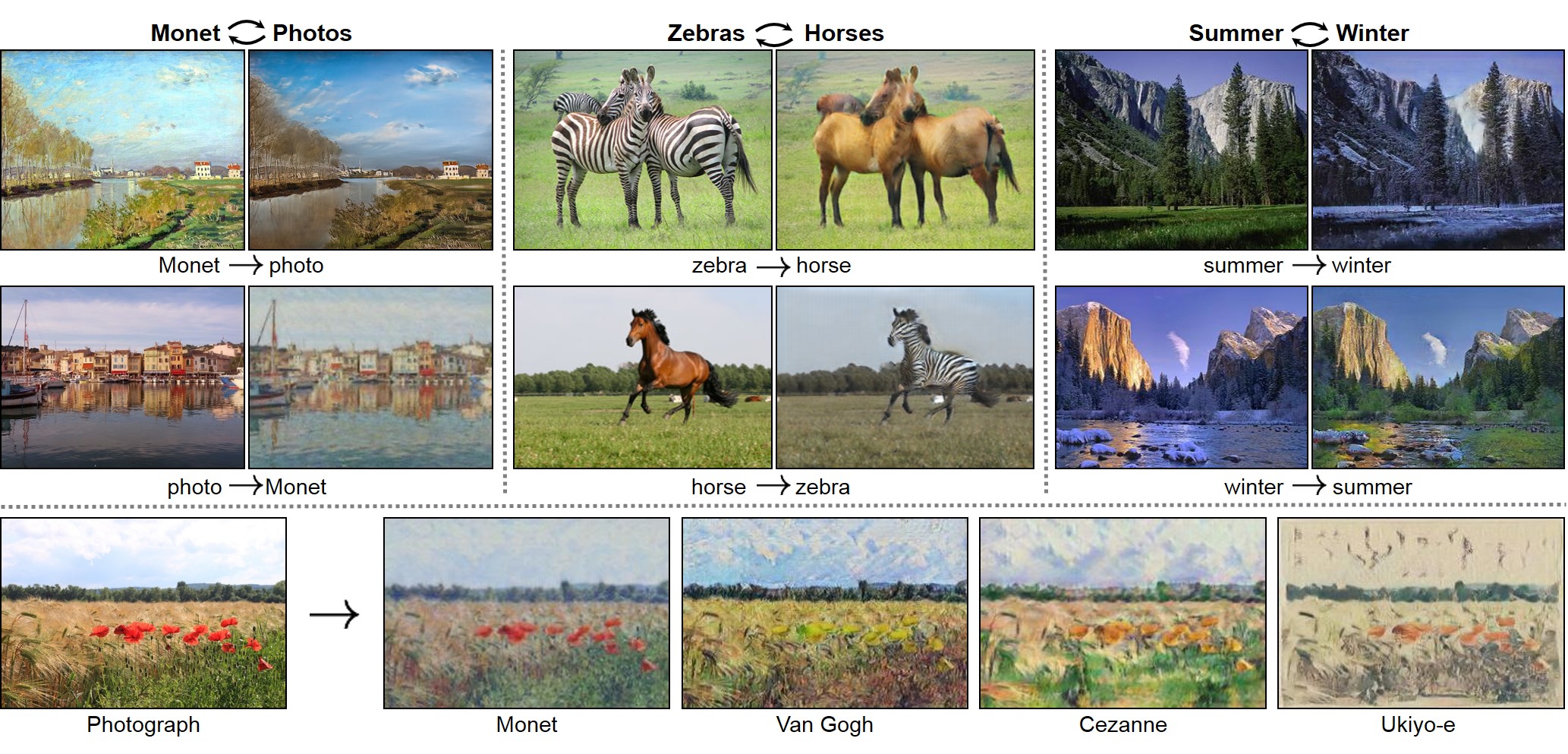

- `horse2zebra`: 939 horse images and 1177 zebra images downloaded from [ImageNet](http://www.image-net.org/) using keywords `wild horse` and `zebra`

- `apple2orange`: 996 apple images and 1020 orange images downloaded from [ImageNet](http://www.image-net.org/) using keywords `apple` and `navel orange`.

- `summer2winter_yosemite`: 1273 summer Yosemite images and 854 winter Yosemite images were downloaded using Flickr API. See more details in our paper.

- `monet2photo`, `vangogh2photo`, `ukiyoe2photo`, `cezanne2photo`: The art images were downloaded from [Wikiart](https://www.wikiart.org/). The real photos are downloaded from Flickr using combination of tags *landscape* and *landscapephotography*. The training set size of each class is Monet:1074, Cezanne:584, Van Gogh:401, Ukiyo-e:1433, Photographs:6853.

- `iphone2dslr_flower`: both classe of images were downlaoded from Flickr. The training set size of each class is iPhone:1813, DSLR:3316. See more details in our paper.

To train a model on your own datasets, you need to create a data folder with two subdirectories `trainA` and `trainB` that contain images from domain A and B. You can test your model on your training set by setting ``phase='train'`` in `test.lua`. You can also create subdirectories like `testA` and `testB` if you have additional test data.

You should **not** expect our method to work on any combination of two random datasets (e.g. `cats<->keyboards`). From our experiments, we find it works better if two datasets share similar visual content. For example, `landscape painting<->landscape photographs` works much better than `portrait painting <-> landscape photographs`. `zebras<->horses` achieves compelling results while `cats<->dogs` completely fails. See the following section for more discussion.

### pix2pix datasets

Download the pix2pix datasets using the following script:

```bash

bash ./datasets/download_pix2pix_dataset.sh dataset_name

```

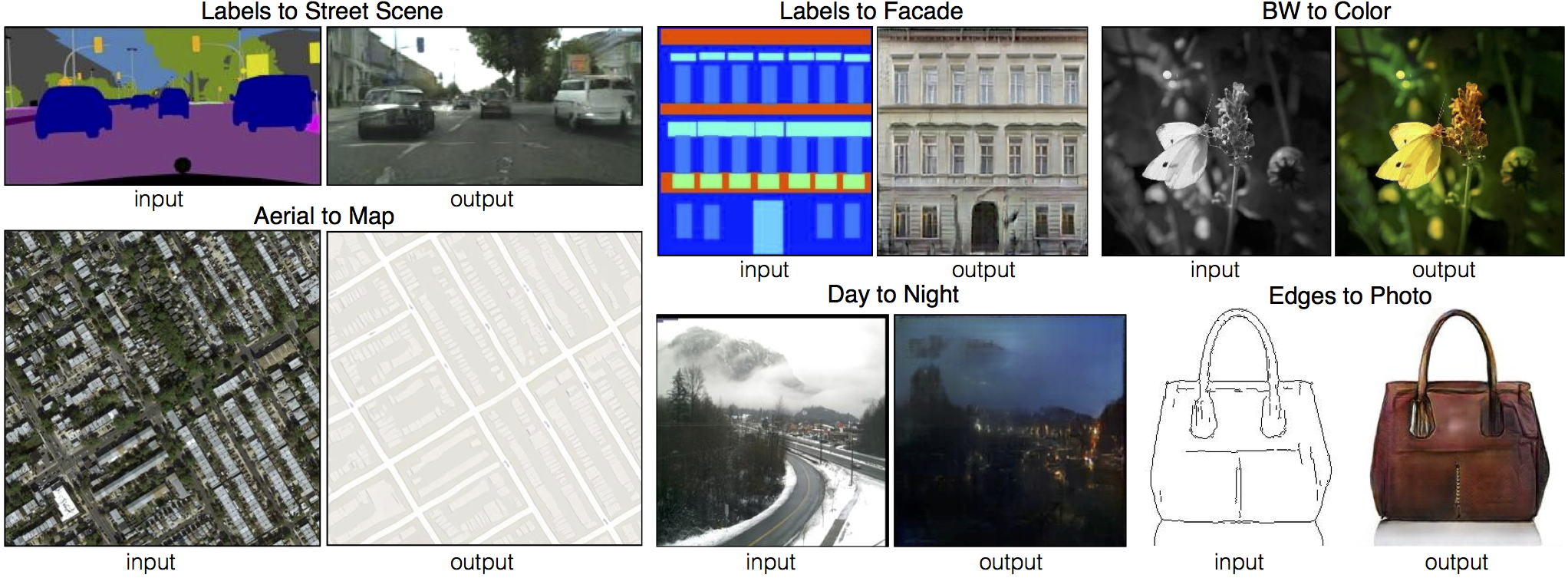

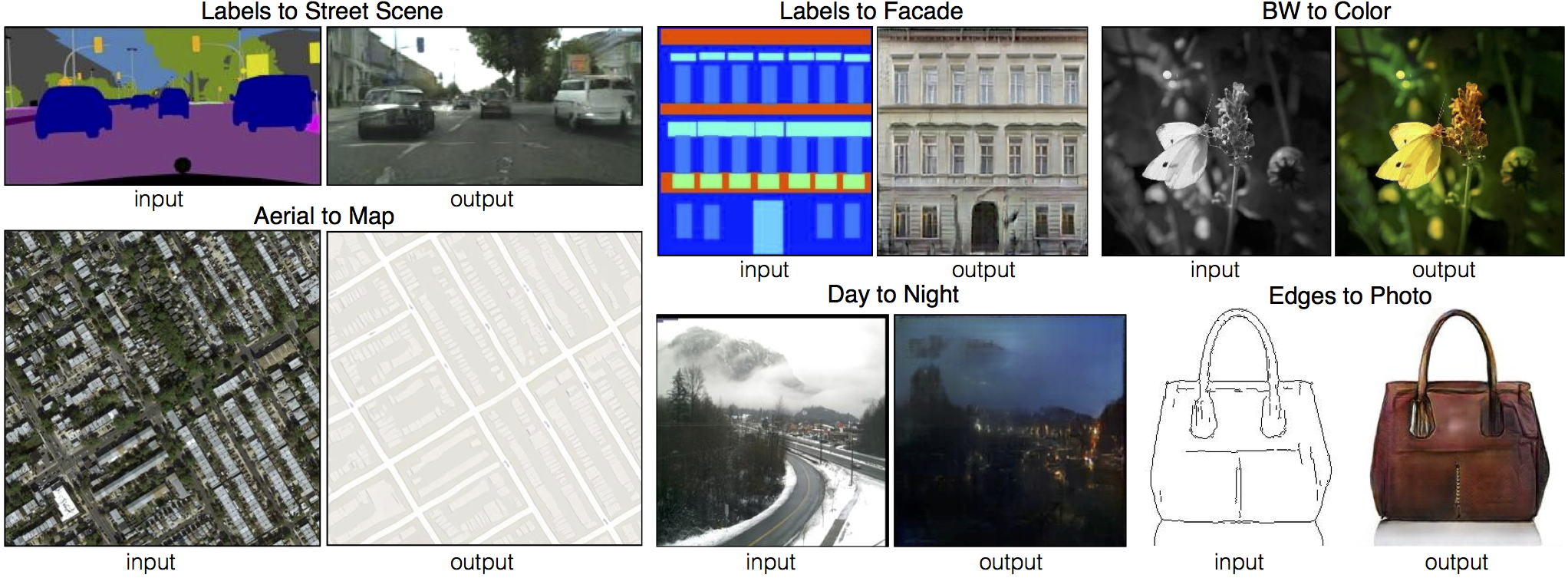

- `facades`: 400 images from [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps

- `edges2shoes`: 50k training images from [UT Zappos50K dataset](http://vision.cs.utexas.edu/projects/finegrained/utzap50k/). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

- `edges2handbags`: 137K Amazon Handbag images from [iGAN project](https://github.com/junyanz/iGAN). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

We provide a python script to generate pix2pix training data in the form of pairs of images {A,B}, where A and B are two different depictions of the same underlying scene. For example, these might be pairs {label map, photo} or {bw image, color image}. Then we can learn to translate A to B or B to A:

Create folder `/path/to/data` with subfolders `A` and `B`. `A` and `B` should each have their own subfolders `train`, `val`, `test`, etc. In `/path/to/data/A/train`, put training images in style A. In `/path/to/data/B/train`, put the corresponding images in style B. Repeat same for other data splits (`val`, `test`, etc).

Corresponding images in a pair {A,B} must be the same size and have the same filename, e.g. `/path/to/data/A/train/1.jpg` is considered to correspond to `/path/to/data/B/train/1.jpg`.

Once the data is formatted this way, call:

```bash

python datasets/combine_A_and_B.py --fold_A /path/to/data/A --fold_B /path/to/data/B --fold_AB /path/to/data

```

This will combine each pair of images (A,B) into a single image file, ready for training.

## TODO

- add reflection and other padding layers.

- add one-direction test model.

- fully test Unet architecture.

- fully test instance normalization layer from [fast-neural-style project](https://github.com/darkstar112358/fast-neural-style).

- fully test CPU mode and multi-GPU mode.

## Related Projects:

[CycleGAN](https://github.com/junyanz/CycleGAN): Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[pix2pix](https://github.com/phillipi/pix2pix): Image-to-image translation using conditional adversarial nets

[iGAN](https://github.com/junyanz/iGAN): Interactive Image Generation via Generative Adversarial Networks

## Cat Paper Collection

If you love cats, and love reading cool graphics, vision, and learning papers, please check out the Cat Paper Collection:

[[Github]](https://github.com/junyanz/CatPapers) [[Webpage]](http://people.eecs.berkeley.edu/~junyanz/cat/cat_papers.html)

## Acknowledgments

Code is inspired by [pytorch-DCGAN](https://github.com/pytorch/examples/tree/master/dcgan).

If you use this code for your research, please cite:

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/)\*, [Taesung Park](https://taesung.me/)\*, [Phillip Isola](http://web.mit.edu/phillipi/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In arxiv, 2017. (* equal contributions)

Image-to-Image Translation Using Conditional Adversarial Networks

[Phillip Isola](http://web.mit.edu/phillipi/), [Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/), [Tinghui Zhou](https://people.eecs.berkeley.edu/~tinghuiz/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In CVPR 2017.

## Prerequisites

- Linux or OSX.

- Python 2 or Python 3.

- CPU or NVIDIA GPU + CUDA CuDNN.

## Getting Started

### Installation

- Install PyTorch and dependencies from http://pytorch.org/

- Install python libraries [dominate](https://github.com/Knio/dominate).

- Clone this repo:

```bash

git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

cd pytorch-CycleGAN-and-pix2pix

```

### CycleGAN train/test

- Download a CycleGAN dataset (e.g. maps):

```bash

bash ./datasets/download_cyclegan_dataset.sh maps

```

- Train a model:

```bash

python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

```

To view results as the model trains, check out the html file `./checkpoints/maps_cyclegan/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --phase test

```

The test results will be saved to a html file here: `./results/maps_cyclegan/latest_test/index.html`.

### pix2pix train/test

- Download a pix2pix dataset (e.g.facades):

```bash

bash ./datasets/download_pix2pix_dataset.sh facades

```

- Train a model:

```bash

python train.py --dataroot ./datasets/facades --name facades_pix2pix --gpu_ids 0 --model pix2pix --align_data --which_direction BtoA

```

To view results as the model trains, check out the html file `./checkpoints/facades_pix2pix/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --phase val --align_data --which_direction BtoA

```

The test results will be saved to a html file here: `./results/facades_pix2pix/latest_val/index.html`.

More example scripts can be found at `scripts` directory.

## Training/test Details

- See `options/train_options.py` and `options/base_options.py` for training flags; see `optoins/test_options.py` and `options/base_options.py` for test flags.

- CPU/GPU: Set `--gpu_ids -1` to use CPU mode; set `--gpu_ids 0,1,2` for multi-GPU mode.

- During training, you can visualize the result of current training. If you set `--display_id 0`, we will periodically save the training results to `[opt.checkpoints_dir]/[opt.name]/web/`. If you set `--display_id` > 0, the results will be shown on a local graphics web server launched by [szym/display: a lightweight display server for Torch](https://github.com/szym/display). To do this, you should have Torch, Python 3, and the display package installed. You need to invoke `th -ldisplay.start 8000 0.0.0.0` to start the server.

### CycleGAN Datasets

Download the CycleGAN datasets using the following script:

```bash

bash ./datasets/download_cyclegan_dataset.sh dataset_name

```

- `facades`: 400 images from the [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps.

- `horse2zebra`: 939 horse images and 1177 zebra images downloaded from [ImageNet](http://www.image-net.org/) using keywords `wild horse` and `zebra`

- `apple2orange`: 996 apple images and 1020 orange images downloaded from [ImageNet](http://www.image-net.org/) using keywords `apple` and `navel orange`.

- `summer2winter_yosemite`: 1273 summer Yosemite images and 854 winter Yosemite images were downloaded using Flickr API. See more details in our paper.

- `monet2photo`, `vangogh2photo`, `ukiyoe2photo`, `cezanne2photo`: The art images were downloaded from [Wikiart](https://www.wikiart.org/). The real photos are downloaded from Flickr using combination of tags *landscape* and *landscapephotography*. The training set size of each class is Monet:1074, Cezanne:584, Van Gogh:401, Ukiyo-e:1433, Photographs:6853.

- `iphone2dslr_flower`: both classe of images were downlaoded from Flickr. The training set size of each class is iPhone:1813, DSLR:3316. See more details in our paper.

To train a model on your own datasets, you need to create a data folder with two subdirectories `trainA` and `trainB` that contain images from domain A and B. You can test your model on your training set by setting ``phase='train'`` in `test.lua`. You can also create subdirectories like `testA` and `testB` if you have additional test data.

You should **not** expect our method to work on any combination of two random datasets (e.g. `cats<->keyboards`). From our experiments, we find it works better if two datasets share similar visual content. For example, `landscape painting<->landscape photographs` works much better than `portrait painting <-> landscape photographs`. `zebras<->horses` achieves compelling results while `cats<->dogs` completely fails. See the following section for more discussion.

### pix2pix datasets

Download the pix2pix datasets using the following script:

```bash

bash ./datasets/download_pix2pix_dataset.sh dataset_name

```

- `facades`: 400 images from [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps

- `edges2shoes`: 50k training images from [UT Zappos50K dataset](http://vision.cs.utexas.edu/projects/finegrained/utzap50k/). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

- `edges2handbags`: 137K Amazon Handbag images from [iGAN project](https://github.com/junyanz/iGAN). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

We provide a python script to generate pix2pix training data in the form of pairs of images {A,B}, where A and B are two different depictions of the same underlying scene. For example, these might be pairs {label map, photo} or {bw image, color image}. Then we can learn to translate A to B or B to A:

Create folder `/path/to/data` with subfolders `A` and `B`. `A` and `B` should each have their own subfolders `train`, `val`, `test`, etc. In `/path/to/data/A/train`, put training images in style A. In `/path/to/data/B/train`, put the corresponding images in style B. Repeat same for other data splits (`val`, `test`, etc).

Corresponding images in a pair {A,B} must be the same size and have the same filename, e.g. `/path/to/data/A/train/1.jpg` is considered to correspond to `/path/to/data/B/train/1.jpg`.

Once the data is formatted this way, call:

```bash

python datasets/combine_A_and_B.py --fold_A /path/to/data/A --fold_B /path/to/data/B --fold_AB /path/to/data

```

This will combine each pair of images (A,B) into a single image file, ready for training.

## TODO

- add reflection and other padding layers.

- add one-direction test model.

- fully test Unet architecture.

- fully test instance normalization layer from [fast-neural-style project](https://github.com/darkstar112358/fast-neural-style).

- fully test CPU mode and multi-GPU mode.

## Related Projects:

[CycleGAN](https://github.com/junyanz/CycleGAN): Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[pix2pix](https://github.com/phillipi/pix2pix): Image-to-image translation using conditional adversarial nets

[iGAN](https://github.com/junyanz/iGAN): Interactive Image Generation via Generative Adversarial Networks

## Cat Paper Collection

If you love cats, and love reading cool graphics, vision, and learning papers, please check out the Cat Paper Collection:

[[Github]](https://github.com/junyanz/CatPapers) [[Webpage]](http://people.eecs.berkeley.edu/~junyanz/cat/cat_papers.html)

## Acknowledgments

Code is inspired by [pytorch-DCGAN](https://github.com/pytorch/examples/tree/master/dcgan).

#### Pix2pix: [[Project]](https://phillipi.github.io/pix2pix/) [[Paper]](https://arxiv.org/pdf/1611.07004v1.pdf) [[Torch]](https://github.com/phillipi/pix2pix)

#### Pix2pix: [[Project]](https://phillipi.github.io/pix2pix/) [[Paper]](https://arxiv.org/pdf/1611.07004v1.pdf) [[Torch]](https://github.com/phillipi/pix2pix)

#### [[EdgesCats Demo]](https://affinelayer.com/pixsrv/) [[pix2pix-tensorflow]](https://github.com/affinelayer/pix2pix-tensorflow)

Written by [Christopher Hesse](https://twitter.com/christophrhesse)

#### [[EdgesCats Demo]](https://affinelayer.com/pixsrv/) [[pix2pix-tensorflow]](https://github.com/affinelayer/pix2pix-tensorflow)

Written by [Christopher Hesse](https://twitter.com/christophrhesse)

If you use this code for your research, please cite:

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/)\*, [Taesung Park](https://taesung.me/)\*, [Phillip Isola](http://web.mit.edu/phillipi/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In arxiv, 2017. (* equal contributions)

Image-to-Image Translation Using Conditional Adversarial Networks

[Phillip Isola](http://web.mit.edu/phillipi/), [Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/), [Tinghui Zhou](https://people.eecs.berkeley.edu/~tinghuiz/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In CVPR 2017.

## Prerequisites

- Linux or OSX.

- Python 2 or Python 3.

- CPU or NVIDIA GPU + CUDA CuDNN.

## Getting Started

### Installation

- Install PyTorch and dependencies from http://pytorch.org/

- Install python libraries [dominate](https://github.com/Knio/dominate).

- Clone this repo:

```bash

git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

cd pytorch-CycleGAN-and-pix2pix

```

### CycleGAN train/test

- Download a CycleGAN dataset (e.g. maps):

```bash

bash ./datasets/download_cyclegan_dataset.sh maps

```

- Train a model:

```bash

python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

```

To view results as the model trains, check out the html file `./checkpoints/maps_cyclegan/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --phase test

```

The test results will be saved to a html file here: `./results/maps_cyclegan/latest_test/index.html`.

### pix2pix train/test

- Download a pix2pix dataset (e.g.facades):

```bash

bash ./datasets/download_pix2pix_dataset.sh facades

```

- Train a model:

```bash

python train.py --dataroot ./datasets/facades --name facades_pix2pix --gpu_ids 0 --model pix2pix --align_data --which_direction BtoA

```

To view results as the model trains, check out the html file `./checkpoints/facades_pix2pix/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --phase val --align_data --which_direction BtoA

```

The test results will be saved to a html file here: `./results/facades_pix2pix/latest_val/index.html`.

More example scripts can be found at `scripts` directory.

## Training/test Details

- See `options/train_options.py` and `options/base_options.py` for training flags; see `optoins/test_options.py` and `options/base_options.py` for test flags.

- CPU/GPU: Set `--gpu_ids -1` to use CPU mode; set `--gpu_ids 0,1,2` for multi-GPU mode.

- During training, you can visualize the result of current training. If you set `--display_id 0`, we will periodically save the training results to `[opt.checkpoints_dir]/[opt.name]/web/`. If you set `--display_id` > 0, the results will be shown on a local graphics web server launched by [szym/display: a lightweight display server for Torch](https://github.com/szym/display). To do this, you should have Torch, Python 3, and the display package installed. You need to invoke `th -ldisplay.start 8000 0.0.0.0` to start the server.

### CycleGAN Datasets

Download the CycleGAN datasets using the following script:

```bash

bash ./datasets/download_cyclegan_dataset.sh dataset_name

```

- `facades`: 400 images from the [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps.

- `horse2zebra`: 939 horse images and 1177 zebra images downloaded from [ImageNet](http://www.image-net.org/) using keywords `wild horse` and `zebra`

- `apple2orange`: 996 apple images and 1020 orange images downloaded from [ImageNet](http://www.image-net.org/) using keywords `apple` and `navel orange`.

- `summer2winter_yosemite`: 1273 summer Yosemite images and 854 winter Yosemite images were downloaded using Flickr API. See more details in our paper.

- `monet2photo`, `vangogh2photo`, `ukiyoe2photo`, `cezanne2photo`: The art images were downloaded from [Wikiart](https://www.wikiart.org/). The real photos are downloaded from Flickr using combination of tags *landscape* and *landscapephotography*. The training set size of each class is Monet:1074, Cezanne:584, Van Gogh:401, Ukiyo-e:1433, Photographs:6853.

- `iphone2dslr_flower`: both classe of images were downlaoded from Flickr. The training set size of each class is iPhone:1813, DSLR:3316. See more details in our paper.

To train a model on your own datasets, you need to create a data folder with two subdirectories `trainA` and `trainB` that contain images from domain A and B. You can test your model on your training set by setting ``phase='train'`` in `test.lua`. You can also create subdirectories like `testA` and `testB` if you have additional test data.

You should **not** expect our method to work on any combination of two random datasets (e.g. `cats<->keyboards`). From our experiments, we find it works better if two datasets share similar visual content. For example, `landscape painting<->landscape photographs` works much better than `portrait painting <-> landscape photographs`. `zebras<->horses` achieves compelling results while `cats<->dogs` completely fails. See the following section for more discussion.

### pix2pix datasets

Download the pix2pix datasets using the following script:

```bash

bash ./datasets/download_pix2pix_dataset.sh dataset_name

```

- `facades`: 400 images from [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps

- `edges2shoes`: 50k training images from [UT Zappos50K dataset](http://vision.cs.utexas.edu/projects/finegrained/utzap50k/). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

- `edges2handbags`: 137K Amazon Handbag images from [iGAN project](https://github.com/junyanz/iGAN). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

We provide a python script to generate pix2pix training data in the form of pairs of images {A,B}, where A and B are two different depictions of the same underlying scene. For example, these might be pairs {label map, photo} or {bw image, color image}. Then we can learn to translate A to B or B to A:

Create folder `/path/to/data` with subfolders `A` and `B`. `A` and `B` should each have their own subfolders `train`, `val`, `test`, etc. In `/path/to/data/A/train`, put training images in style A. In `/path/to/data/B/train`, put the corresponding images in style B. Repeat same for other data splits (`val`, `test`, etc).

Corresponding images in a pair {A,B} must be the same size and have the same filename, e.g. `/path/to/data/A/train/1.jpg` is considered to correspond to `/path/to/data/B/train/1.jpg`.

Once the data is formatted this way, call:

```bash

python datasets/combine_A_and_B.py --fold_A /path/to/data/A --fold_B /path/to/data/B --fold_AB /path/to/data

```

This will combine each pair of images (A,B) into a single image file, ready for training.

## TODO

- add reflection and other padding layers.

- add one-direction test model.

- fully test Unet architecture.

- fully test instance normalization layer from [fast-neural-style project](https://github.com/darkstar112358/fast-neural-style).

- fully test CPU mode and multi-GPU mode.

## Related Projects:

[CycleGAN](https://github.com/junyanz/CycleGAN): Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[pix2pix](https://github.com/phillipi/pix2pix): Image-to-image translation using conditional adversarial nets

[iGAN](https://github.com/junyanz/iGAN): Interactive Image Generation via Generative Adversarial Networks

## Cat Paper Collection

If you love cats, and love reading cool graphics, vision, and learning papers, please check out the Cat Paper Collection:

[[Github]](https://github.com/junyanz/CatPapers) [[Webpage]](http://people.eecs.berkeley.edu/~junyanz/cat/cat_papers.html)

## Acknowledgments

Code is inspired by [pytorch-DCGAN](https://github.com/pytorch/examples/tree/master/dcgan).

If you use this code for your research, please cite:

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/)\*, [Taesung Park](https://taesung.me/)\*, [Phillip Isola](http://web.mit.edu/phillipi/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In arxiv, 2017. (* equal contributions)

Image-to-Image Translation Using Conditional Adversarial Networks

[Phillip Isola](http://web.mit.edu/phillipi/), [Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/), [Tinghui Zhou](https://people.eecs.berkeley.edu/~tinghuiz/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

In CVPR 2017.

## Prerequisites

- Linux or OSX.

- Python 2 or Python 3.

- CPU or NVIDIA GPU + CUDA CuDNN.

## Getting Started

### Installation

- Install PyTorch and dependencies from http://pytorch.org/

- Install python libraries [dominate](https://github.com/Knio/dominate).

- Clone this repo:

```bash

git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

cd pytorch-CycleGAN-and-pix2pix

```

### CycleGAN train/test

- Download a CycleGAN dataset (e.g. maps):

```bash

bash ./datasets/download_cyclegan_dataset.sh maps

```

- Train a model:

```bash

python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

```

To view results as the model trains, check out the html file `./checkpoints/maps_cyclegan/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --phase test

```

The test results will be saved to a html file here: `./results/maps_cyclegan/latest_test/index.html`.

### pix2pix train/test

- Download a pix2pix dataset (e.g.facades):

```bash

bash ./datasets/download_pix2pix_dataset.sh facades

```

- Train a model:

```bash

python train.py --dataroot ./datasets/facades --name facades_pix2pix --gpu_ids 0 --model pix2pix --align_data --which_direction BtoA

```

To view results as the model trains, check out the html file `./checkpoints/facades_pix2pix/web/index.html`

- Test the model:

```bash

python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --phase val --align_data --which_direction BtoA

```

The test results will be saved to a html file here: `./results/facades_pix2pix/latest_val/index.html`.

More example scripts can be found at `scripts` directory.

## Training/test Details

- See `options/train_options.py` and `options/base_options.py` for training flags; see `optoins/test_options.py` and `options/base_options.py` for test flags.

- CPU/GPU: Set `--gpu_ids -1` to use CPU mode; set `--gpu_ids 0,1,2` for multi-GPU mode.

- During training, you can visualize the result of current training. If you set `--display_id 0`, we will periodically save the training results to `[opt.checkpoints_dir]/[opt.name]/web/`. If you set `--display_id` > 0, the results will be shown on a local graphics web server launched by [szym/display: a lightweight display server for Torch](https://github.com/szym/display). To do this, you should have Torch, Python 3, and the display package installed. You need to invoke `th -ldisplay.start 8000 0.0.0.0` to start the server.

### CycleGAN Datasets

Download the CycleGAN datasets using the following script:

```bash

bash ./datasets/download_cyclegan_dataset.sh dataset_name

```

- `facades`: 400 images from the [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps.

- `horse2zebra`: 939 horse images and 1177 zebra images downloaded from [ImageNet](http://www.image-net.org/) using keywords `wild horse` and `zebra`

- `apple2orange`: 996 apple images and 1020 orange images downloaded from [ImageNet](http://www.image-net.org/) using keywords `apple` and `navel orange`.

- `summer2winter_yosemite`: 1273 summer Yosemite images and 854 winter Yosemite images were downloaded using Flickr API. See more details in our paper.

- `monet2photo`, `vangogh2photo`, `ukiyoe2photo`, `cezanne2photo`: The art images were downloaded from [Wikiart](https://www.wikiart.org/). The real photos are downloaded from Flickr using combination of tags *landscape* and *landscapephotography*. The training set size of each class is Monet:1074, Cezanne:584, Van Gogh:401, Ukiyo-e:1433, Photographs:6853.

- `iphone2dslr_flower`: both classe of images were downlaoded from Flickr. The training set size of each class is iPhone:1813, DSLR:3316. See more details in our paper.

To train a model on your own datasets, you need to create a data folder with two subdirectories `trainA` and `trainB` that contain images from domain A and B. You can test your model on your training set by setting ``phase='train'`` in `test.lua`. You can also create subdirectories like `testA` and `testB` if you have additional test data.

You should **not** expect our method to work on any combination of two random datasets (e.g. `cats<->keyboards`). From our experiments, we find it works better if two datasets share similar visual content. For example, `landscape painting<->landscape photographs` works much better than `portrait painting <-> landscape photographs`. `zebras<->horses` achieves compelling results while `cats<->dogs` completely fails. See the following section for more discussion.

### pix2pix datasets

Download the pix2pix datasets using the following script:

```bash

bash ./datasets/download_pix2pix_dataset.sh dataset_name

```

- `facades`: 400 images from [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade/).

- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com/).

- `maps`: 1096 training images scraped from Google Maps

- `edges2shoes`: 50k training images from [UT Zappos50K dataset](http://vision.cs.utexas.edu/projects/finegrained/utzap50k/). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

- `edges2handbags`: 137K Amazon Handbag images from [iGAN project](https://github.com/junyanz/iGAN). Edges are computed by [HED](https://github.com/s9xie/hed) edge detector + post-processing.

We provide a python script to generate pix2pix training data in the form of pairs of images {A,B}, where A and B are two different depictions of the same underlying scene. For example, these might be pairs {label map, photo} or {bw image, color image}. Then we can learn to translate A to B or B to A:

Create folder `/path/to/data` with subfolders `A` and `B`. `A` and `B` should each have their own subfolders `train`, `val`, `test`, etc. In `/path/to/data/A/train`, put training images in style A. In `/path/to/data/B/train`, put the corresponding images in style B. Repeat same for other data splits (`val`, `test`, etc).

Corresponding images in a pair {A,B} must be the same size and have the same filename, e.g. `/path/to/data/A/train/1.jpg` is considered to correspond to `/path/to/data/B/train/1.jpg`.

Once the data is formatted this way, call:

```bash

python datasets/combine_A_and_B.py --fold_A /path/to/data/A --fold_B /path/to/data/B --fold_AB /path/to/data

```

This will combine each pair of images (A,B) into a single image file, ready for training.

## TODO

- add reflection and other padding layers.

- add one-direction test model.

- fully test Unet architecture.

- fully test instance normalization layer from [fast-neural-style project](https://github.com/darkstar112358/fast-neural-style).

- fully test CPU mode and multi-GPU mode.

## Related Projects:

[CycleGAN](https://github.com/junyanz/CycleGAN): Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

[pix2pix](https://github.com/phillipi/pix2pix): Image-to-image translation using conditional adversarial nets

[iGAN](https://github.com/junyanz/iGAN): Interactive Image Generation via Generative Adversarial Networks

## Cat Paper Collection

If you love cats, and love reading cool graphics, vision, and learning papers, please check out the Cat Paper Collection:

[[Github]](https://github.com/junyanz/CatPapers) [[Webpage]](http://people.eecs.berkeley.edu/~junyanz/cat/cat_papers.html)

## Acknowledgments

Code is inspired by [pytorch-DCGAN](https://github.com/pytorch/examples/tree/master/dcgan).