------------

status: published

title: Duke MTMC

desc: Duke MTMC is a dataset of surveillance camera footage of students on the Duke University campus

subdesc: Duke MTMC contains over 2 million video frames and over 2,000 unique identities collected from 8 HD cameras on the Duke University campus in March 2014

slug: duke_mtmc

cssclass: dataset

image: assets/background.jpg

published: 2019-2-23

updated: 2019-2-23

authors: Adam Harvey

------------

### sidebar

### end sidebar

## Duke MTMC

[ page under development ]

The Duke Multi-Target, Multi-Camera Tracking Dataset (MTMC) is a dataset of video recorded on the Duke University campus for research and development of networked camera surveillance systems. MTMC tracking is used for citywide dragnet surveillance systems, such as those deployed throughout China by SenseTime[^sensetime_qz], and for the oppressive monitoring of 2.5 million Uyghurs in Xinjiang by SenseNets[^sensenets_uyghurs]. In fact, researchers from both SenseTime[^sensetime1][^sensetime2] and SenseNets[^sensenets_sensetime] used the Duke MTMC dataset in their research.

The Duke MTMC dataset is unique because it is the largest publicly available MTMC and person re-identification dataset and has the longest duration of annotated video. In total, the Duke MTMC dataset provides over 14 hours of 1080p video from 8 synchronized surveillance cameras.[^duke_mtmc_orig] It is among the most widely used person re-identification datasets in the world. The approximately 2,700 unique people in the Duke MTMC videos, most of whom are students, are used for research and development of surveillance technologies by commercial, academic, and even defense organizations.

The creation and publication of the Duke MTMC dataset in 2016 was originally funded by the U.S. Army Research Laboratory and the National Science Foundation[^duke_mtmc_orig]. Since 2016, use of the Duke MTMC dataset images has been publicly acknowledged in research funded by or on behalf of China's National University of Defense Technology[^cn_defense1][^cn_defense2], IARPA and IBM[^iarpa_ibm], and the U.S. Department of Homeland Security[^us_dhs].

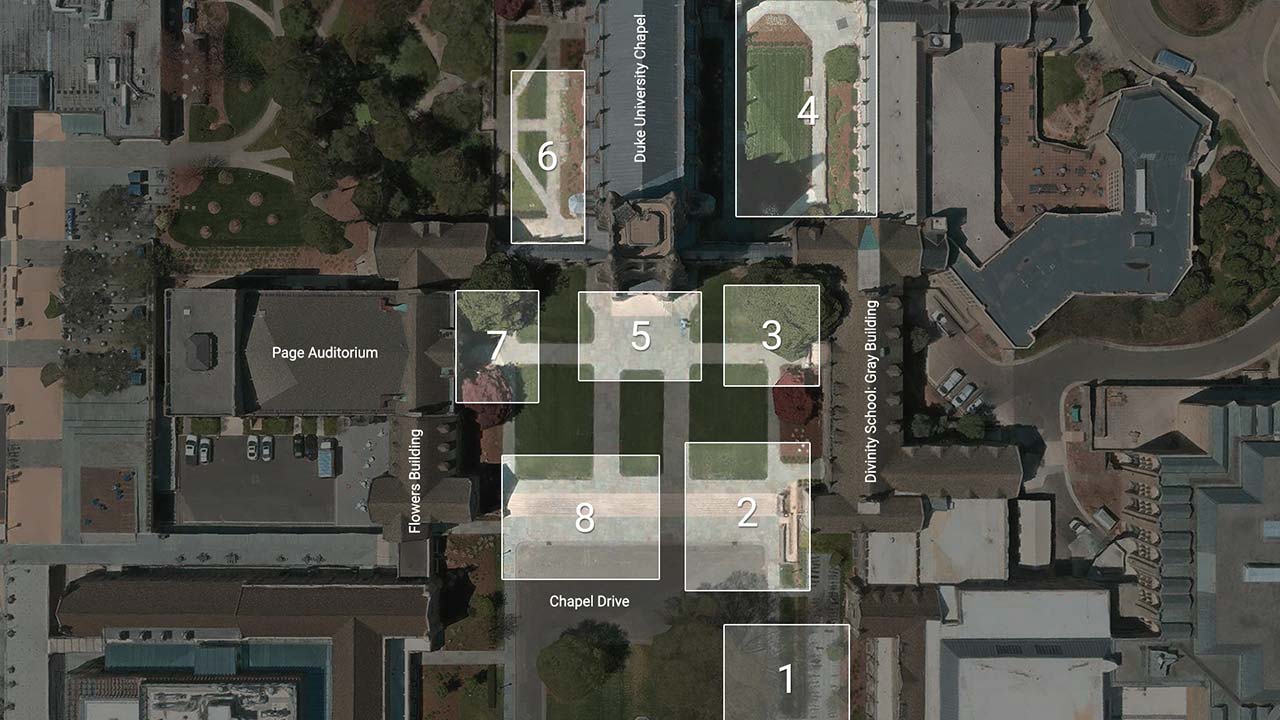

The 8 cameras deployed on Duke's campus were specifically set up to capture students "during periods between lectures, when pedestrian traffic is heavy".[^duke_mtmc_orig] Cameras 7 and 2 capture large groups of prospective students and children. Camera 5 was positioned to capture students as they enter and exit Duke University's main chapel. Each camera's location is documented below.

{% include 'dashboard.html' %}

{% include 'supplementary_header.html' %}

### Notes

The Duke MTMC dataset paper mentions 2,700 identities, but the dataset's ground truth file only lists annotations for 1,812 identities.

### Footnotes

[^sensetime_qz]:

[^sensenets_uyghurs]:

[^sensenets_sensetime]: "Attention-Aware Compositional Network for Person Re-identification". 2018. [Source](https://www.semanticscholar.org/paper/Attention-Aware-Compositional-Network-for-Person-Xu-Zhao/14ce502bc19b225466126b256511f9c05cadcb6e)

[^sensetime1]: "End-to-End Deep Kronecker-Product Matching for Person Re-identification". 2018. [Source](https://www.semanticscholar.org/paper/End-to-End-Deep-Kronecker-Product-Matching-for-Shen-Xiao/947954cafdefd471b75da8c3bb4c21b9e6d57838)

[^sensetime2]: "Person Re-identification with Deep Similarity-Guided Graph Neural Network". 2018. [Source](https://www.semanticscholar.org/paper/Person-Re-identification-with-Deep-Graph-Neural-Shen-Li/08d2a558ea2deb117dd8066e864612bf2899905b)

[^duke_mtmc_orig]: "Performance Measures and a Data Set for Multi-Target, Multi-Camera Tracking". 2016. [Source](https://www.semanticscholar.org/paper/Performance-Measures-and-a-Data-Set-for-Tracking-Ristani-Solera/27a2fad58dd8727e280f97036e0d2bc55ef5424c)

[^cn_defense1]: "Tracking by Animation: Unsupervised Learning of Multi-Object Attentive Trackers". 2018. [Source](https://www.semanticscholar.org/paper/Tracking-by-Animation%3A-Unsupervised-Learning-of-He-Liu/e90816e1a0e14ea1e7039e0b2782260999aef786)

[^cn_defense2]: "Unsupervised Multi-Object Detection for Video Surveillance Using Memory-Based Recurrent Attention Networks". 2018. [Source](https://www.semanticscholar.org/paper/Unsupervised-Multi-Object-Detection-for-Video-Using-He-He/59f357015054bab43fb8cbfd3f3dbf17b1d1f881)

[^iarpa_ibm]: "Horizontal Pyramid Matching for Person Re-identification". 2019. [Source](https://www.semanticscholar.org/paper/Horizontal-Pyramid-Matching-for-Person-Fu-Wei/c2a5f27d97744bc1f96d7e1074395749e3c59bc8)

[^us_dhs]: "Re-Identification with Consistent Attentive Siamese Networks". 2018. [Source](https://www.semanticscholar.org/paper/Re-Identification-with-Consistent-Attentive-Siamese-Zheng-Karanam/24d6d3adf2176516ef0de2e943ce2084e27c4f94)